Abstract

Recent advances in Vision-Language-Action models (VLAs) have expanded the capabilities of embodied intelligence. However, significant challenges remain in real-time decision-making in complex 3D environments, which demand second-level responses, high-resolution perception, and tactical reasoning under dynamic conditions. To advance the field, we introduce CombatVLA, an efficient VLA model optimized for combat tasks in 3D action role-playing games(ARPGs). Specifically, our CombatVLA is a 3B model trained on video-action pairs collected by an action tracker, where the data is formatted as action-of-thought (AoT) sequences. Thereafter, CombatVLA seamlessly integrates into an action execution framework, allowing efficient inference through our truncated AoT strategy. Experimental results demonstrate that CombatVLA not only outperforms all existing models on the combat understanding benchmark but also achieves a 50-fold acceleration in game combat. Moreover, it has a higher task success rate than human players. We will open-sourcing all resources, including the action tracker, dataset, model weights, training code, and framework implementation.

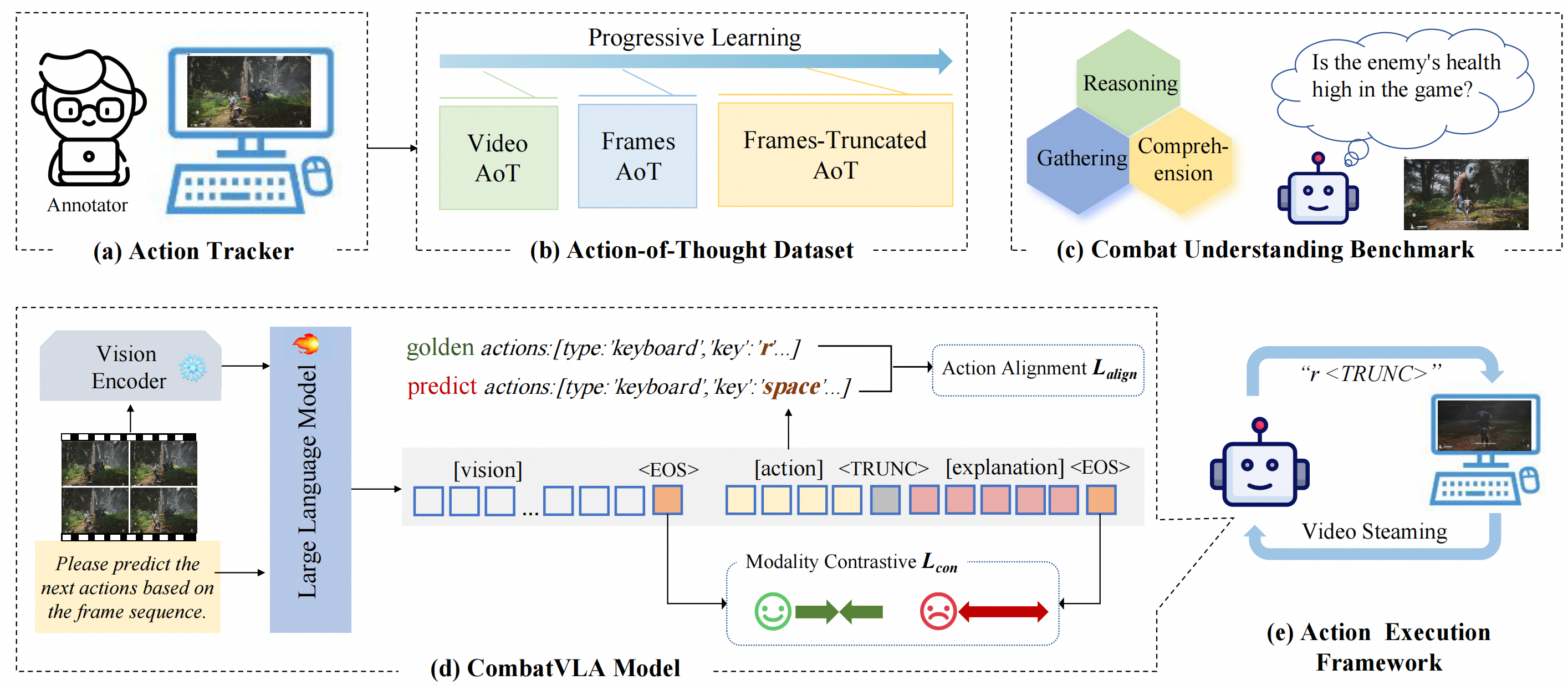

Pipeline of CombatVLA

(a) An action tracker collects human data on keyboard and mouse use. (b) Three types of AoT training data collected by the action tracker are used for progressive learning.

(c) Combat understanding benchmark (namely CUBench) assesses the model's combat IQ in three areas: gathering, comprehension, and reasoning.

(d) CombatVLA model is trained on AoT data with the constraint of action alignment loss and modality contrastive loss. (e) Deployment of CombatVLA to operate real PCs.